Box Embeddings: Paper Overviews

Tamanna Hossain-Kay / 2021-11-09

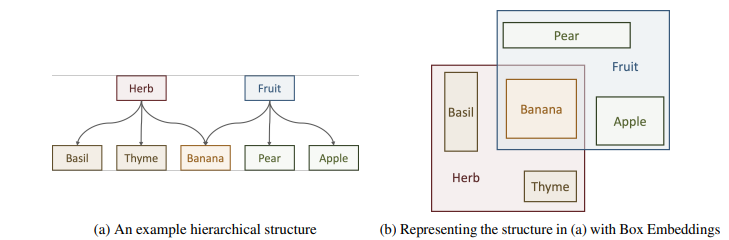

Geometric embeddings can be used to represent transitive asymmetric relationships. Box-embeddings are n-dimensional hyperrectangles that can represent such relationships by containment.

Formally, a “box” is defined as a Cartesian product of closed intervals,

Parameterization: may be the output from a neural network

Python package: Box Embeddings: An open-source library for representation learning using geometric structures

In order to learn box-embeddings, intersections and volumes of boxes need to be computed. This can be done in a few different ways:

- Hard Intersection + Hard Volume: Probabilistic Embedding of Knowledge Graphs with Box Lattice Measures (Vilnis et al., 2018)

- Hard Intersection + Soft Volume: Smoothing the Geometry of Probabilistic Box Embeddings (Li et al., 2018)

- Gumbel Intersection + Bessel Approx. Volume: Improving Local Identifiability in Probabilistic Box Embeddings (Dasgupta et al., 2020)

Read on for brief overviews of each method.

Probabilistic Embedding of Knowledge Graphs with Box Lattice Measures

Authors: Luke Vilnis, Xiang Li, Shikhar Murty, Andrew McCallum; University of Massachusetts Amherst

Method: Hard Intersection, Hard Volume

Defining Box operations

Note,

- While boxes are closed under intersection they’re not closed under union.

- ), but

Volume can be computed as,

where is the standard hinge function.

Volume can be interpreted as probability. For a concept associated with box ,

Learning WordNet

The transitive reduction of the WordNet noun hierarchy contains 82,114 entities and 84,363 edges.

The learning task is an edge classification task. Given a pair of nodes , the probability of existence of the edge is computed as

Gold label: Existence

Loss: Cross-entropy

Surrogate Intersection: When boxes do not intersect, the joint probability is 0 so parameters cannot be updated. In this case, a lower bound is used:

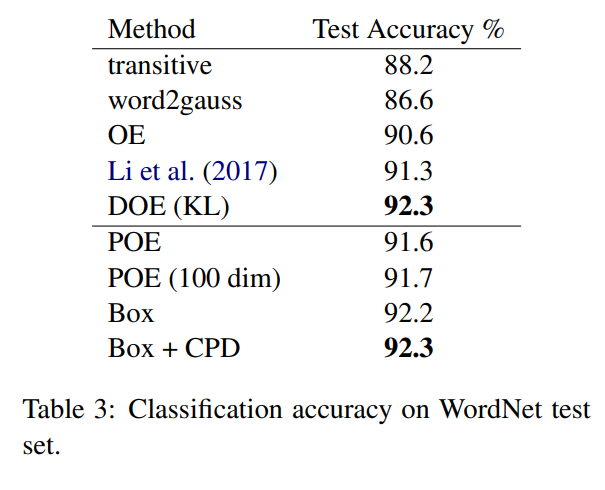

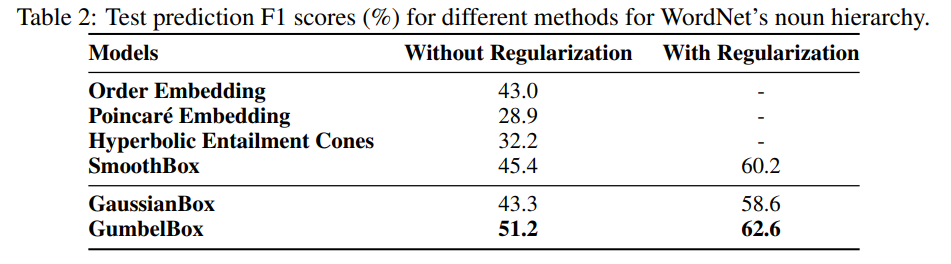

WordNet Results

Box-embeddings perform better than baselines in terms of classification accuracy. Refer to paper for details on baseline models.

Smoothing the Geometry of Probabilistic Box Embeddings

Authors: Xiang Li, Luke Vilnis,Dongxu Zhang, Michael Boratko, Andrew McCallum; University of Massachusetts Amherst

Method: Hard Intersection, Soft Volume

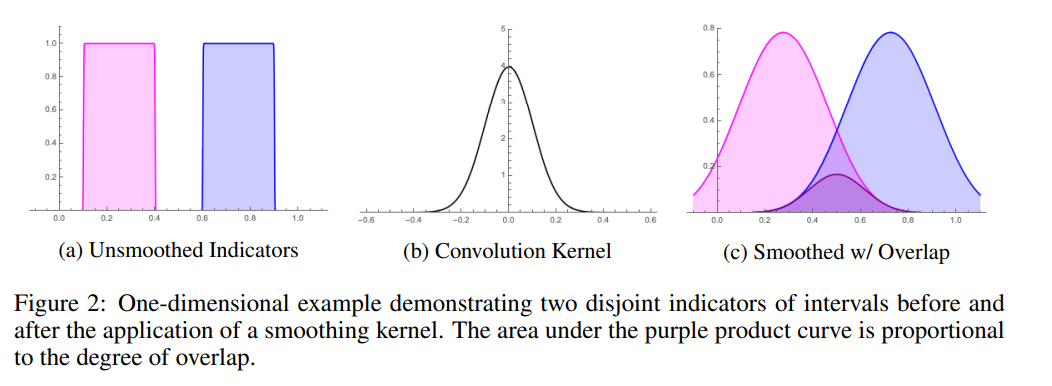

As mentioned in the previous paper (Vilnis et al., 2018), the gradient cannot flow when boxes disjoint. Vilnis et al., 2018 address this issue using a surrogate intersection probability. Here an alternative is proposed using using a Gaussian convolution with the original boxes to smooth the box edges into density functions.

Define boxes as indicator functions of intervals. Volume of boxes can then be expressed as integrals over the products of the indicator functions. Consider the 1D case for ease of exposition and results will generalize to higher dimensions.

The volume of the intersection of two boxes representing the intervals and (representing the joint probability between intervals) can be expressed as,

The smoothed version is,

where,

and

Integral Solution:

where , is the softplus function, the integral of the logistic sigmoid, and

Proof

Let , and note the identity from (Jebara et al., 2004; Vilnis & McCallum, 2015):

Then, Then with ,

Approximation

Multiplication of Gaussian-smoothed indicators have the property so for cases ,

- Idempotency requirement of bounded lattices is violated:

- Practically then if we are to treat the outputs of as probabilities, the consequence is

So we will approximate to get a version that is idempotent.

The first approximation follows from the approximation of by a logistic sigmoid given in Bowling et al. (2009).

However, it is still not idempotent except in the zero-temperature limit. Since as goes to zero, we recover the original formula with the hinge function which is idempotent,The limit

(From Wolfram Alpha) Two-sided limit does not exist.

Limit from the left: for

Limit from the right: for

The second approximation is needed to get a similar idempotent property when using the softplus fuinction as when using the hinge function,

Normalization

But finally, because softplus upper-bounds the hinge function, it is capable of outputting values that are greater than 1 , and therefore must be normalized.

Thus, in the multi-dimensional case we have:

where,

and = Global minimum and maximum in dimension.

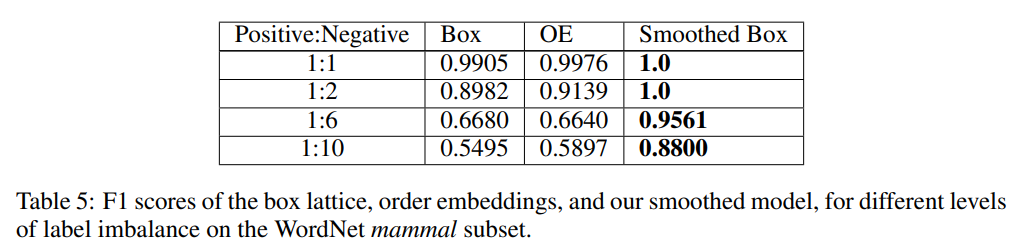

WordNet Results

Sparsity is increased (positive:negative ratio increased) in the data to create more disjoint cases. In sparser regimes, the smoothed box greatly outperforms the baselines (original hard box, and OE).

Improving Local Identifiability in Probabilistic Box Embeddings

Authors: Shib Sankar Dasgupta, Michael Boratko, Dongxu Zhang, Luke Vilnis, Xiang Lorraine Li, Andrew McCallum; University of Massachusetts, Amherst

Method: Gumbel Intersection, Bessel Approx. Volume

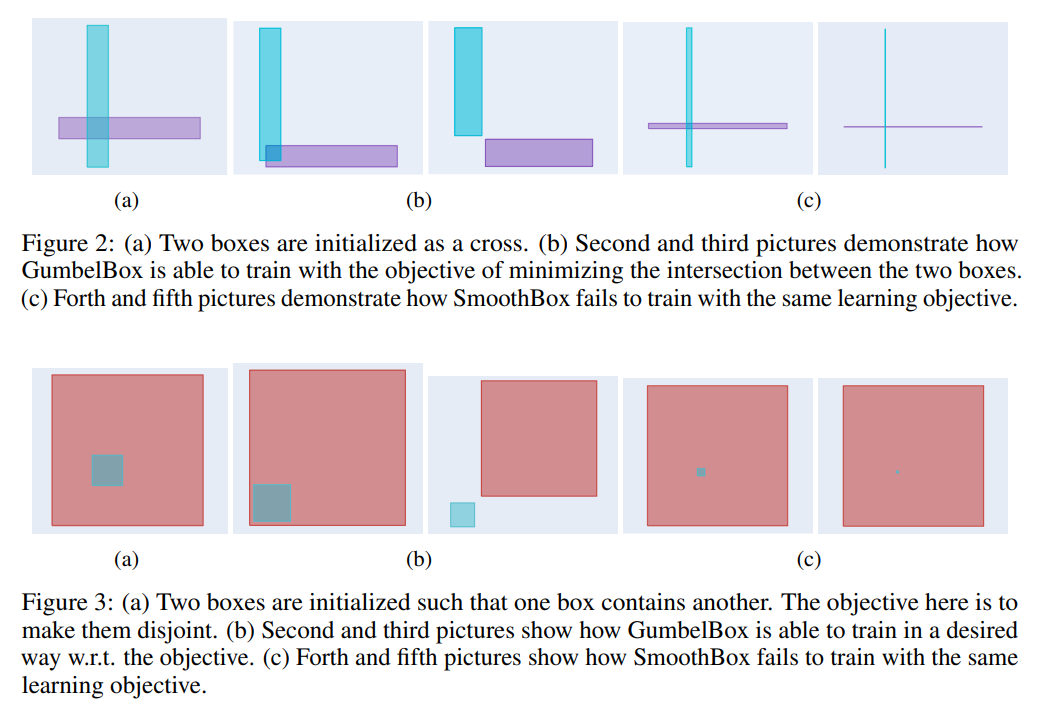

Both of the above proposed methods can have problems with local indentifiability since many different configurations of boxes can lead to the same joint probability value. This can lead to pathological parameter settings during train (Figure 2c; Figure 3c).

The method proposed here defines box edges as random variable, which leads to probabilities as expected volumes. This densifies the gradient of joint probabilities.

Gumbel Distribution

Since computing intersections of boxes involves computing minima and maxima, a min-max stable distribution is chosen: Gumbel distribution. This means that the maximum of multiple Gumbel random variables also follows a Gumbel distribution (MaxGumbel), and the minimum of multiple Gumbel random variables also follows a Gumbel distribution (MinGumbel).

Specifically,

If then and if , then

Gumbel-box process

For a probability space , with , the Gumbel-box process is generated as For a uniform base measure on , each is distributed according toIntersection

Volume Calculation

The expected length of an interval where and is given by where is the modified Bessel function of second kind, order

Proof

We note that this is equal to however this calculation can be continued, combining the integrals, as We integrate by parts, letting , where the portion made use of the substitution We have, therefore, that (12) becomes The first term goes to Setting the second term becomes With , this is a known integral representation of the modified Bessel function of the second kind of order zero, [4, Eq. 10.32.9], which completes the proof of proposition 1 .

Let Then the expected volume is, where is a set of variables taking the value 1, and and are the vectors of location parameters for the minimum and maximum coordinates of a .

Approximation

Computing conditional probabilities exactly requires the conditional expectation, which is intractable since it is a quotient. Instead, we approximate it by its first-order Taylor expansion, which takes the form of a ratio of two simple expectations:

Softplus Approximation

Due to extreme similarity of the exact volume to the softplus function, the following approximation is used:

WordNet Results

Gumbel boxes perform better than baselines on WordNet’s noun hierarchy in terms of F1 score. Refer to paper for details on baseline models.