Orthogonal Procrustes

Tamanna Hossain-Kay / 2021-06-28

I came across the Orthogonal Procrustes technique when reading the paper Diachronic Word Embeddings Reveal Statistical Laws of Semantic Change. It was used to align word embeddings from different runs of SVD (Singular Value Decomposition) and SGNS (Skipgram with Negative Sampling), since they can both result in arbitrary orthogonal transformations of word vectors.

So what is Orthogonal Procrustes?

Problem

Solution

Proof of Solution

Example

Problem

Given two matrices , find a matrix for mapping to . Specifically,

where denotes the Frobenius norm.

Solution

Let and be its factorization (SVD). Then the matrix for mapping to is .

Proof of Solution

This proof is based on the one found in Wikipedia: Orthogonal Procrustes problem with additional exposition for my own understanding:

But note that,

Thus,

But note that, is an orthogonal matrix since it is the product of orthogonal matrices: by the constraint, , due to the SVD factorization of . Then expression is maximised when equals the identity matrix .

Thus where is the solution for the optimal value of that minimizes the norm squared .

Example

Consider a 2D matrix , which represents an eighth note. The matrix (eighth_note.csv) along with all the code that will be used here to implement Orthogonal Procrustes can be found on Github. The repo also contains code used to generate the matrix, plus a matrix that represents a smiley face (smiley.csv) as an alternative :)

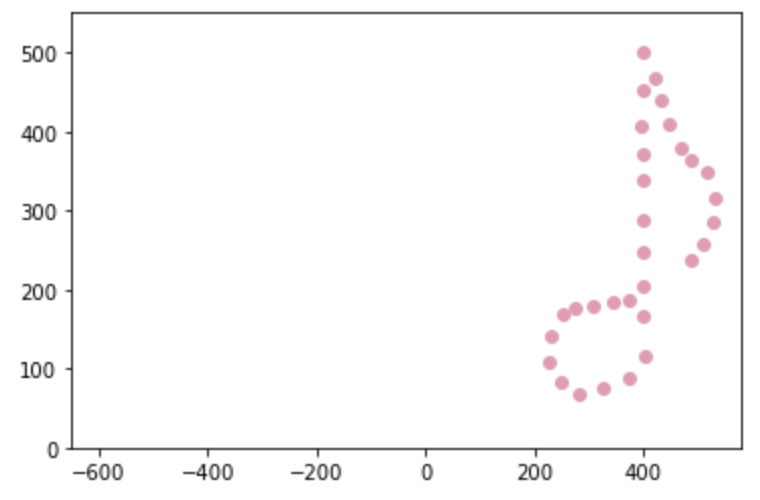

is plotted in pink below.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

#Read data

A=pd.read_csv('eighth_note.csv')

A=A.to_numpy().transpose()

#Plot

plt.scatter(A.transpose()[:,0], A.transpose()[:,1], c='#ea99b2')

plt.xlim([-650,580])

plt.ylim([0,550])

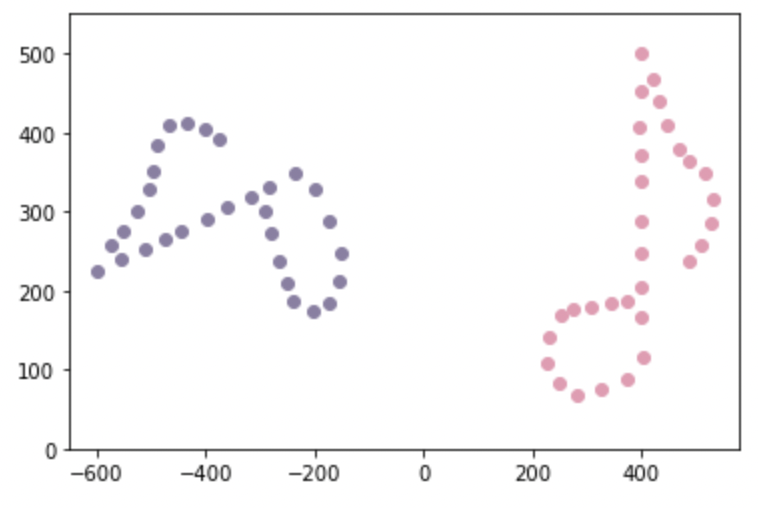

plt.show()Create a matrix , which is a rotation of with some noise. is plotted in lavender below.

#Create B

theta = np.pi * 0.6

R_known = np.array([[np.cos(theta), -np.sin(theta)],[np.sin(theta), np.cos(theta)]])

epsilon = 0.01 # noise

B = np.dot(R_known,A) + epsilon * np.random.normal(0,1, A.shape)

#Plot

plt.scatter(A.transpose()[:,0], A.transpose()[:,1], c='#ea99b2')

plt.scatter(B.transpose()[:,0], B.transpose()[:,1], c='#8b7fa4')

plt.xlim([-650,580])

plt.ylim([0,550])

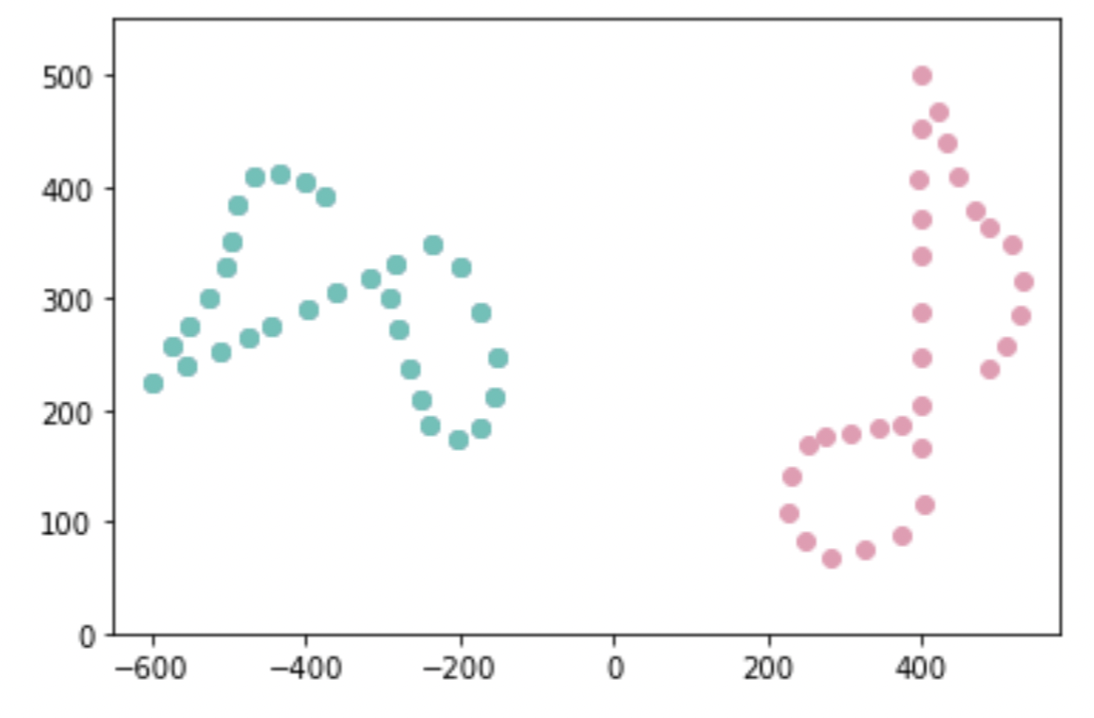

plt.show()Now Orthogonal Procrustes is used to find a matrix which will allow for a mapping from to .

#Find orthogonal matrix

from numpy.linalg import svd

M=np.dot(B,A.transpose())

u,s,vh=svd(M)

R=np.dot(u,vh)Transform using to map it to . The transformed matrix .

is plotted using cyan below: it overlaps so closely that we can’t see the lavendar plot of B underneath.

# Mapped matrix

new_A=np.dot(R,A)

from matplotlib import pyplot as plt

plt.scatter(B.transpose()[:,0], B.transpose()[:,1], c='#8b7fa4')

plt.scatter(A.transpose()[:,0], A.transpose()[:,1], c='#ea99b2')

plt.scatter(new_A.transpose()[:,0], new_A.transpose()[:,1], c='#55c3b8')

plt.xlim([-650,580])

plt.ylim([0,550])

plt.show()